One of the most distinctive features of untrustworthy journals is a deliberate effort to confuse authors by falsely claiming metrics that have allegedly been assigned to the journal in the databases Journal Citation Reports (JCR) and Scopus. JCR calculates the impact factor for the journals it indexes, while Scopus calculates three metrics for its journals: CiteScore, SNIP and SJR (we discuss metrics in a separate study material).

Untrustworthy journals present on their websites the values of metrics whose names resemble those mentioned above (e.g. Global Impact Factor, Journal Impact Factor). The aim is to lure authors into publishing their articles in the journal so that it can collect article processing charges. Because publishing in a journal with an impact factor counts heavily in the evaluation of scholarly achievements and adds to an author’s prestige, it is a strong motivation for authors. Untrustworthy journals exploit this motivation by displaying metrics with names similar to those provided by JCR and Scopus.

Verifying this criterion is very easy. Whenever you encounter a journal that advertises a metric with a name similar to impact factor, CiteScore, SNIP or SJR, search for the journal in JCR or Scopus and check whether it is listed there and has a current value of the respective metric. Remember that metric values are published with a delay. For example, the impact factor is commonly published in June or July. Therefore, the most recent value available in the first half of 2020 is for the year 2018, and in the second half of 2020 it is for 2019.
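The publication delay described above can be turned into a small rule of thumb. The following is only a minimal sketch: the function name `latest_metric_year` and the cut-off of 1 July are assumptions made for illustration, since the actual release date varies from year to year.

```python
from datetime import date

# Assumption for illustration only: new metric values become available
# on 1 July each year (the text says "June or July").
ASSUMED_RELEASE_MONTH = 7


def latest_metric_year(check_date: date,
                       release_month: int = ASSUMED_RELEASE_MONTH) -> int:
    """Return the most recent year for which a metric value should exist."""
    if check_date.month >= release_month:
        # This year's release has already happened; it covers the previous year.
        return check_date.year - 1
    # This year's release is still pending; the newest value is two years old.
    return check_date.year - 2


print(latest_metric_year(date(2020, 3, 15)))  # 2018
print(latest_metric_year(date(2020, 9, 15)))  # 2019
```

If a journal advertises a value for a year later than the one this rule returns, that alone is a reason to check the claim in JCR or Scopus more carefully.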

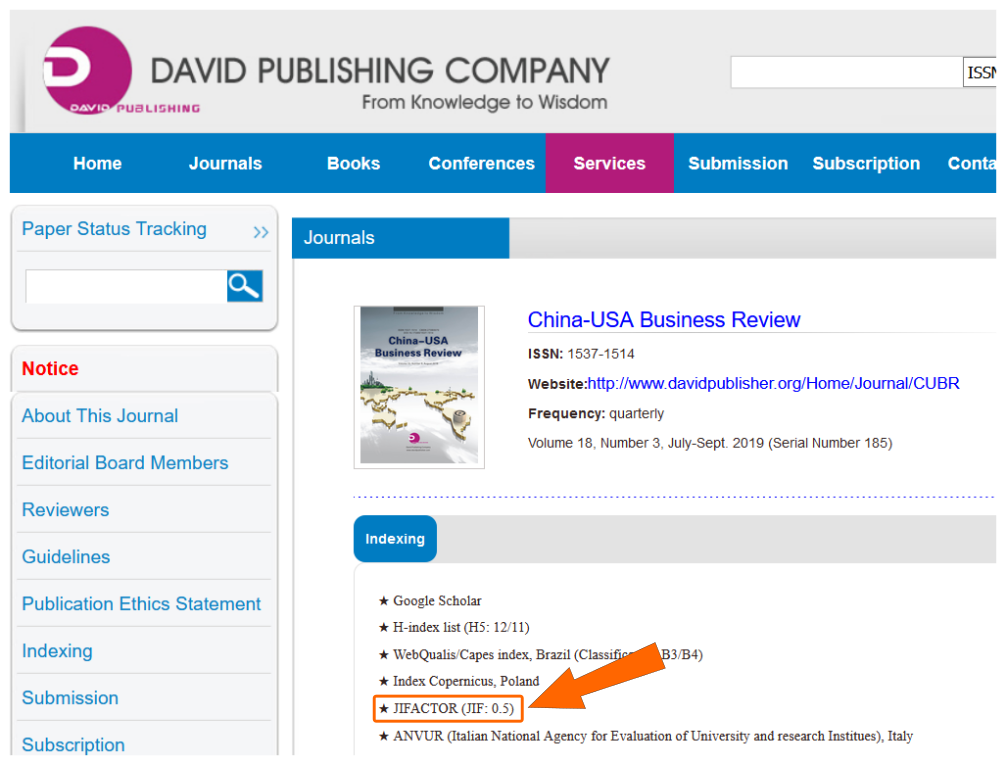

Journals commonly provide citation metrics directly on their website or in a section about indexing in databases. In the example below, the journal China-USA Business Review lists its metrics in the section Indexing. Here the metric name JIFACTOR may indicate either an effort to mislead authors by deliberately using a name similar to the official one, or the use of a false metric (for more information about false metrics, see below).

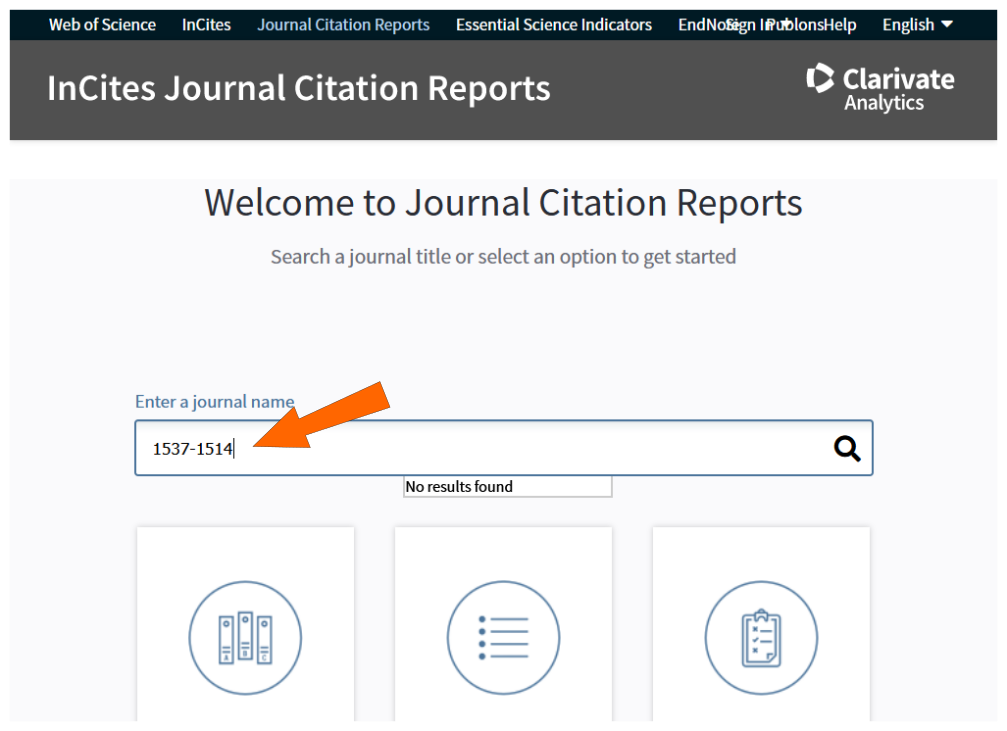

Because the name of the metric is not a link to a website with more information, we need to check whether the journal is listed in JCR and whether the metric is genuine. The figure below shows that the journal is not indexed in JCR. It therefore does not meet the criterion: it is either lying about being indexed in JCR or providing false information about its metrics.

Another example of a journal falsely claiming to have citation metrics is the Indian Journal of Advanced Nursing, which declares an impact factor of 2.002 (the value appears in a moving bar that also shows an IBI Factor; see the section about untrustworthy metrics below).

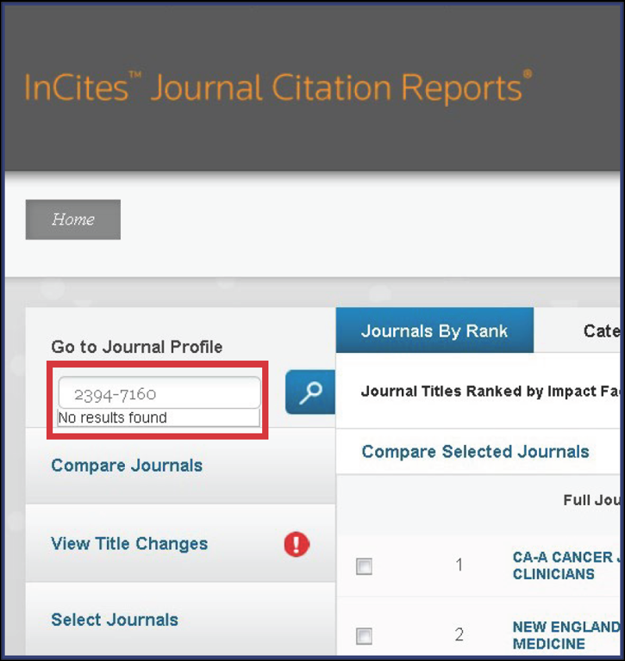

When we tried to verify the value of this metric in JCR, we found that the journal is not indexed in the database.

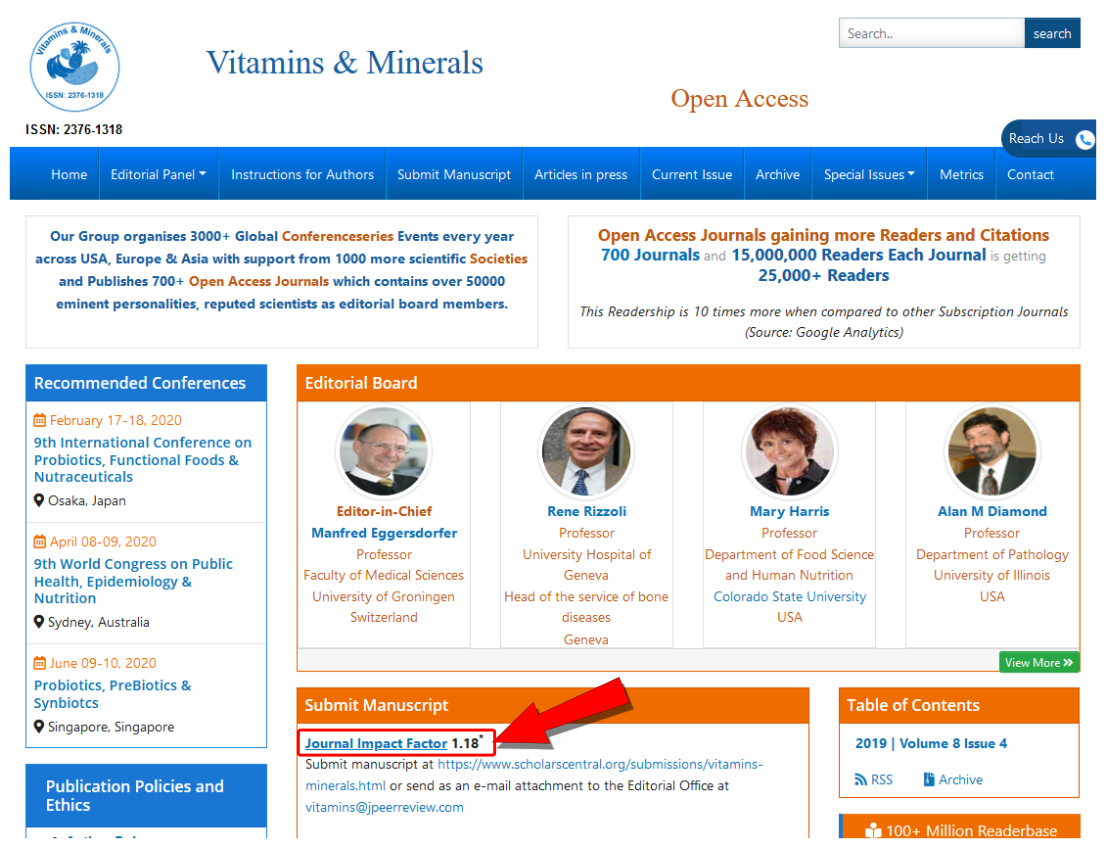

A different situation is shown by the journal Vitamins & Minerals and its metric Journal Impact Factor. This may be either an attempt to imitate the name of the real impact factor from JCR with the aim of confusing authors, or a case of providing a misleading metric.

In contrast to the preceding example, here the name of the metric is a link to a website (see below) that describes how it is calculated. Although the calculation itself does not differ from that of the real impact factor, the metric is based on citations from the Google Scholar Citation Index database, according to the information provided (see below). The journal violated the criterion "Accurate information about citation metrics in Journal Citation Reports and Scopus" by using the official name of the real impact factor, Journal Impact Factor, for its own metric. At the same time, the journal did not comply with the criterion "Providing misleading citation metrics", because basing a metric on a system that counts even citations from presentations is questionable.