Untrustworthy journals try to appear prestigious by displaying various citation metrics that have nothing in common with the impact factor, CiteScore, SNIP and SJR from JCR and Scopus. The most common are metrics that combine an adjective with the term impact factor (e.g. Global Impact Factor, General Impact Factor, IBI Factor). The problem with these metrics is their lack of transparency: their method of evaluation is either not published or is based, at least partially, on a subjective evaluation of journals.

This means that if you encounter a metric other than the impact factor, CiteScore, SNIP and SJR from JCR and Scopus, you should try to learn more about it. In the chapter about citation metrics in JCR and Scopus, the journal Vitamins & Minerals and its metric called Journal Impact Factor served as an example. We also explained how that metric is calculated: the method draws on citation data from Google Scholar Citations. Because Google Scholar Citations does not distinguish whether an article is cited by properly published texts or by other documents such as presentations or drafts, the citation data are questionable, and as a result the entire metric is irrelevant.

Another example of a misleading citation metric is the IBI Factor provided on the website of the Indian Journal of Advanced Nursing, which was mentioned in the part about citation metrics in JCR and Scopus.

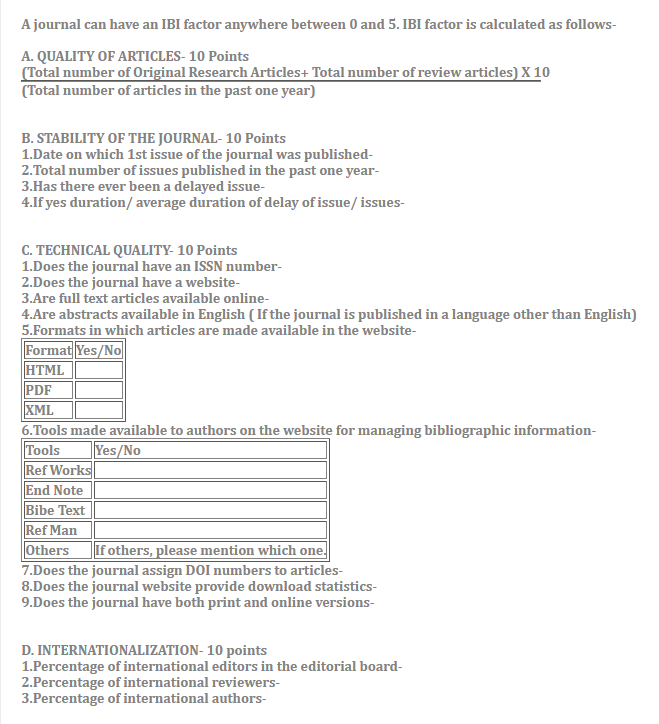

The method of calculating the IBI Factor can be found by searching the internet (see a sample below). However, we find this metric controversial.

The IBI Factor is calculated as follows: first, the journal is awarded points for criteria divided into sections A to E. The resulting IBI Factor is then calculated according to the equation:

The IBI Factor can be considered a misleading metric for several reasons:

- It is questionable whether almost 5,000 journals can be evaluated every year in the way described above, especially given the personnel this would require.

- The metric is not transparent. Already in section A, for example, it is not clear why the sum of journals should be multiplied by 10 and why the number of articles published this year should be divided by the number published in the previous year. In the other sections, it is not clear whether a journal receives 10 points only if it meets all the criteria, or whether the section's points are divided among its criteria so that a journal receives the corresponding share for each criterion it meets (e.g. if a journal meets only one of the four criteria in section B, does it receive 0 points or 2.5 points?).

- The criteria are controversial. For example, because no law or norm requires a journal to be published weekly, monthly, or yearly, the criterion in section B that evaluates the number of issues published last year is irrelevant. Similarly, journals are not obliged to have a website, so criteria 2, 3, 5, 6, and 8 are also irrelevant.
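The scoring ambiguity described in the second point can be made concrete with a small sketch. The 10-point section maximum and the example of one criterion met out of four in section B come from the text; the function names and the assumption that the "divided" reading splits points evenly across criteria are ours, not part of any published IBI Factor method. The sketch only shows that two plausible readings produce different scores for the same journal.

```python
# Hypothetical illustration of two possible readings of a 10-point section.
# Function names and the even split across criteria are assumptions.

SECTION_MAX = 10.0  # points available per section, as discussed in the text


def all_or_nothing(criteria_met: list[bool]) -> float:
    """Reading 1: full section points only if every criterion is met."""
    return SECTION_MAX if all(criteria_met) else 0.0


def proportional(criteria_met: list[bool]) -> float:
    """Reading 2 (assumed even split): each criterion met earns an equal share."""
    if not criteria_met:
        return 0.0
    return SECTION_MAX * sum(criteria_met) / len(criteria_met)


# A journal meeting only one of the four criteria in section B.
section_b = [True, False, False, False]

print(all_or_nothing(section_b))  # 0.0
print(proportional(section_b))    # 2.5
```

Repeated over several sections, such differences could add up, so without a published rule two evaluators might arrive at different IBI Factors for the same journal.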

Other criteria in the remaining sections could be shown to be wrong or useless in the same way. For the purposes of this material, however, the points above suffice to demonstrate that it is vital to check thoroughly any metric other than those from JCR and Scopus.